A.I. Part 2: Building An Effective A.I.

As I briefly mentioned in Part 1, the most effective type of artificial intelligence would be one which allows a human being to interact with a machine more naturally than with simple algorithms and point-and-click interfaces. That way, a machine can begin to understand that you are angry and frustrated when it botches its job, and can discern what it takes to more effectively aggravate you in the future. The most common form of AI is very superficial, but the potential is a well-rounded interface much like what is seen in R2-D2 and C-3PO.

A more rudimentary form of artificial intelligence can be observed in current Internet searches. A search engine learns from your previous searches, what you click on, your browser history, and your bookmarks, just what kind of results you are most likely to click on in the future. It tries to hide things which might offend you, get you outside of your comfort zone, or otherwise expand your intellectual horizons. After all, when you’re less comfortable, you’re less likely to buy stuff.

Other machines are built around trying to learn and understand what they’re told. But when their command ranges are limited, they can’t function properly when given commands outside their initial programming. Honda’s Asimo has demonstrated this problem. It tries to interpret commands it doesn’t understand and doesn’t know either to ask for clarification or simply to give up. Engineers are now working on machines which can learn and understand how to better interact with humans.
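To make that failure mode concrete, here is a minimal Python sketch of a machine that does know when to ask for clarification and when to give up. Everything in it is made up for illustration: the command table, the `respond` function, and the 0.6 similarity cutoff are assumptions, not how Asimo or any real robot works.

```python
from difflib import get_close_matches

# Hypothetical command table: the machine's entire "initial programming".
KNOWN_COMMANDS = {
    "walk": "Walking forward.",
    "wave": "Waving hello.",
    "stop": "Stopping.",
}


def respond(command: str) -> str:
    """Carry out a known command, ask about a near miss, or give up."""
    word = command.strip().lower()

    # Case 1: the command is inside the machine's programming.
    if word in KNOWN_COMMANDS:
        return KNOWN_COMMANDS[word]

    # Case 2: the command is close to something known, so ask
    # for clarification instead of guessing.
    guesses = get_close_matches(word, list(KNOWN_COMMANDS), n=1, cutoff=0.6)
    if guesses:
        return f"Did you mean '{guesses[0]}'?"

    # Case 3: nothing is even close, so give up gracefully.
    return "Sorry, I don't know how to do that."


if __name__ == "__main__":
    for text in ("wave", "wavee", "make me a sandwich"):
        print(f"{text!r}: {respond(text)}")
```

The point of the sketch is only the three-way split: do it, ask about it, or admit defeat. A real machine would need far more than string similarity to decide which case it is in.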

So how can we make a better artificial intelligence? To understand how to produce a better interface, we should first understand how the human mind works, then try to emulate it. In other words, we need to look at how the human mind and other biological minds develop.

Imagine there are four “minds”. Mind 0 consists of pure reactions and instincts. This can be seen in insects and other simpler life forms. Mind 1 consists of fear, aggression, and other lower emotions. This can be seen in both predators, like big cats, and prey, like rodents. Mind 2 includes happiness, humor, and other higher emotions. Dogs, house cats, and other “playful” animals show this level of mentality. Mind 3 is the logical and rational mind. This can be seen to some extent in most animals, but none so much as primates. We can see this entire development in human babies.

Baby, Mind 0

A little tyke has to relieve both its bladder and its colon. The little one does so in its diaper. It moves its legs and other body parts into a position and performs any necessary grunting to make it happen.

Baby, Mind 1

The infant becomes uncomfortable with its messy diaper. It begins to scream out in a way that is only slightly more discernible than death metal lyrics. All it wants is to have its diaper changed by somebody who is actually capable of changing the diaper, since it has not yet developed the necessary rational and motor skills to do the job. At this stage, the child isn’t interested in happiness, just the feeling of security.

Baby, Mind 2

When the baby is being changed, it becomes apparent that the kid still has ammunition. During the changing process, the freshly pressed dress shirt of the one changing said diaper suddenly becomes wet. We can clearly observe Mind 2 when the child laughs uncontrollably at dousing the unfortunate diaper changer’s nice, clean dress shirt using nature’s Super Soaker.

Baby, Mind 3

The amused infant recognizes the circumstances of its recent bout of enjoyment, and the conditions needed to reproduce the situation. We are then able to observe Mind 3 as the baby demonstrates its ability to effectively reproduce its own entertainment at the expense of the just-dressed diaper changer. The unfortunate victim of the wet sniper shot is left with a dilemma: replace the shirt with a different, cleaner one which must also be ironed and pressed, or actually get to work on time and have to explain to everyone in the corporate sales meeting, including potential clients, why that nice dress shirt has a yellow stain and smells like pee-pee.

The interesting thing about the electronic “brain” is that it’s built strictly on Boolean logic, and is therefore, by nature, Mind 3. Properly imitating human thought processes in such a device requires working the development process in the exact opposite direction. Pure logic comes first, followed by mimicking the higher emotions, then self-preservation.

Machine, Mind 3

The machine operates on simple, rational logic. Its thinking is not clouded by bias or false perception. The entirety of its thinking is rooted in pure, cold fact.

Machine, Mind 2

The machine is cordial, friendly, and very likely humorous. It can put a person at ease and lift a person’s spirits. This is the ideal for a robot butler.

Machine, Mind 1

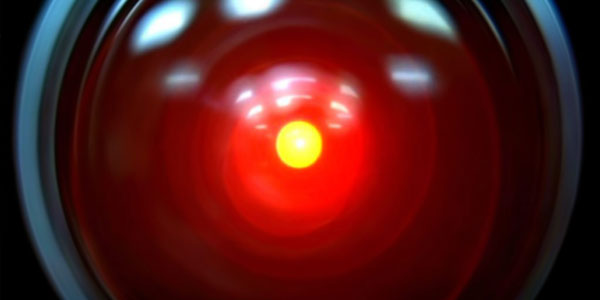

The machine is determined, focused, and often relentless. This mind consists of what are commonly known as the “negative” emotions. Think of the Droid Army, The Terminator, and Marvin the Paranoid Android.

Machine, Mind 0

This is your basic build-and-weld-a-car-on-the-line kind of machine. Pretty straightforward.

Put it together and what have you got? (No, not Bibbidi-Bobbidi-Boo, you silly person. Focus!) What you get is the complete package for a machine which can truly interact with humans seamlessly. This is when you get the classic droids from Star Wars, as well as Lt. Commander Data, Rosie, WALL-E, Twiki, and just about every other lovable piece of scrap hardware science fiction has to offer. They’re machines which can be conversed with, reasoned with, directly commanded, and easily understood.
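For the programmers in the audience, here is a toy Python sketch of what "putting it together" might look like. It is an assumption-heavy illustration, not a real design: the four `mind_*` functions, the trigger words, and the rule that lower minds get first crack at a stimulus are all invented for this example.

```python
from typing import Callable, List, Optional

# Each "mind" looks at a stimulus and either answers or passes (returns None).
Layer = Callable[[str], Optional[str]]


def mind_0(stimulus: str) -> Optional[str]:
    """Pure reaction: the build-and-weld-a-car machine."""
    return "Welding." if stimulus == "weld" else None


def mind_1(stimulus: str) -> Optional[str]:
    """Self-preservation: notices a (hypothetical) threat word."""
    return "Backing away from the window." if "window" in stimulus else None


def mind_2(stimulus: str) -> Optional[str]:
    """Higher emotions: the cordial robot butler."""
    return "Good to see you too!" if stimulus.startswith("hello") else None


def mind_3(stimulus: str) -> Optional[str]:
    """Cold logic: adds two whole numbers written as 'a + b'."""
    parts = stimulus.split("+")
    if len(parts) == 2 and all(p.strip().isdigit() for p in parts):
        return str(int(parts[0]) + int(parts[1]))
    return None


# Assumed priority: reflexes first, reasoning last.
MINDS: List[Layer] = [mind_0, mind_1, mind_2, mind_3]


def react(stimulus: str) -> str:
    """Return the answer from the first mind that has one."""
    text = stimulus.strip().lower()
    for layer in MINDS:
        answer = layer(text)
        if answer is not None:
            return answer
    return "Could you rephrase that?"


if __name__ == "__main__":
    for s in ("weld", "Hello, droid", "2 + 3", "Out the window you go", "sing"):
        print(f"{s!r}: {react(s)}")
```

The machine would be built starting from `mind_3` and working down, but at run time this sketch consults the minds from 0 up, on the assumption that a reflex should not have to wait for a logical proof.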

Substantial efforts have been made and a lot of money spent to create machines with friendly personalities. So far, they’re little more than algorithmic protocols with voice recognition and speech imitation. But they’re coming along. It may not be too long before a computer can have an actual conversation instead of simply being reactive with pre-programmed responses or giving standardized, and often ambiguous or encoded, replies. Then perhaps instead of being aggravated by faulty pieces of circuitry that you want to throw through the window, you can be aggravated by faulty pieces of conversational and likable circuitry … that you want to throw through the window.

Up next: Neural Networking –>
