Making Computers Smarter By Making Them Stupider

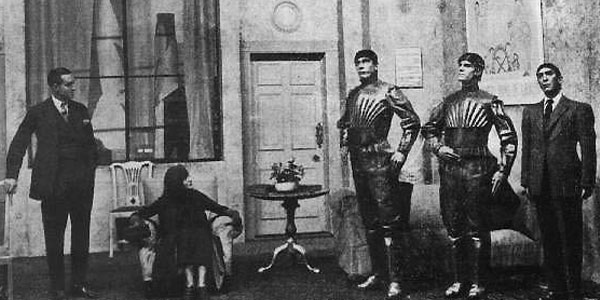

In a lot of classic science fiction, robots are everywhere. While some might be dangerous and out to enslave or kill us, most often they are our butlers, maids, bartenders, nurses, sanitation workers, IRS agents, or other assistants. As technology keeps advancing, developers keep trying to bring that science fiction dream to life. The results have been slow and only moderately impressive so far.

But what if there were some sort of shortcut? What if trying to make computers smarter is keeping them from being smarter? What if the key were to make them somewhat stupider? Bear with me on this.

I was recently at the Maker Faire in Kansas City, one of the larger Maker Faires. I wasn’t that impressed; it seems to be getting smaller every year. As nice as Union Station is, complete with Science City, I think the venue layout is hurting the show the most. And the number of dreamers, with their flying drones, robots, and scientific and engineering wonders, is dwindling, giving way mostly to crafters. Some of the usual exhibitions were there, like soldering instruction, the FIRST Robotics competition, and the Power Wheels Racing Series. There were also some great people, such as Clearly Guilty (whom we featured in a segment on our Planet ComiCon broadcast this year), selling CDs.

Then there was a hidden gem known as Mycroft. (It has the same name as Sherlock Holmes’ brother, but comes from a different source. And I’m pretty sure this one isn’t played by Mark Gatiss.) Mycroft is a voice-interactive A.I. similar to Siri, Cortana, or Amazon’s Alexa. But Mycroft is open source, and that very nature makes it fairly portable. It is built on Linux and can even run on its own on a Raspberry Pi, and its skill modules are constantly being built, not only by the people at the Mycroft company but also by the community at large. And that got me thinking.

Back in the Dark Ages, better known as the Year of Our Lord 2000 A.D., knights fought for honor, witches were burned at the stake, and finding device driver software for Linux was nearly impossible. If you couldn’t write your own drivers, you might as well not use the device at all. BSD users were even more screwed. CUPS (the Common Unix Printing System) eventually helped solve that problem for printers … to a small extent.

But it got me wondering: what if the driver software were cross-platform (perhaps written in Java) and stored on the device itself? Then, when a computer linked up to it by way of USB, Bluetooth, Wi-Fi, or some other method, it would read the drivers and instantly be able to use the device. One could theoretically even print from a wristwatch! But alas, it never came to be.
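Here is a rough sketch of a device that carries its own driver. Instead of shipping executable Java code, it assumes the device stores a small JSON description of its commands. That format is hypothetical, invented for illustration. The host builds a generic handle from that description when it connects. `Device`, `FakePrinter`, `DeviceProxy`, and the manifest fields are all made-up names, and the real transport (USB, Bluetooth, Wi-Fi) is simulated with plain method calls.

```python
import json
from typing import Any, Dict, List, Protocol


class Device(Protocol):
    """The only thing the host knows: how to read a description and send commands."""

    def read_manifest(self) -> str: ...
    def execute(self, command: str, args: Dict[str, Any]) -> Any: ...


class FakePrinter:
    """Simulated device (hypothetical). A real one would sit behind USB, Bluetooth, or Wi-Fi."""

    # Hypothetical self-description stored on the device in a portable format.
    MANIFEST = json.dumps({
        "name": "Example Printer",
        "commands": {
            "print_text": {"params": ["text"]},
            "status": {"params": []},
        },
    })

    def __init__(self) -> None:
        self.printed: List[str] = []

    def read_manifest(self) -> str:
        # The host asks the device to describe itself.
        return self.MANIFEST

    def execute(self, command: str, args: Dict[str, Any]) -> Any:
        # The device does the actual work; the host only forwards requests.
        if command == "print_text":
            self.printed.append(args["text"])
            return "ok"
        if command == "status":
            return "ready"
        raise ValueError(f"unknown command: {command}")


class DeviceProxy:
    """Host-side handle built only from what the device reports about itself."""

    def __init__(self, device: Device) -> None:
        manifest = json.loads(device.read_manifest())
        self.name = manifest["name"]
        self._device = device
        self._commands = manifest["commands"]

    def commands(self) -> List[str]:
        return sorted(self._commands)

    def call(self, command: str, **kwargs: Any) -> Any:
        spec = self._commands.get(command)
        if spec is None:
            raise KeyError(f"{self.name} does not offer {command!r}")
        missing = [p for p in spec["params"] if p not in kwargs]
        if missing:
            raise TypeError(f"missing parameters: {missing}")
        return self._device.execute(command, kwargs)


if __name__ == "__main__":
    printer = FakePrinter()
    host_view = DeviceProxy(printer)  # no printer-specific code on the host side
    print(host_view.commands())  # ['print_text', 'status']
    host_view.call("print_text", text="Hello from a wristwatch")
    print(printer.printed)  # ['Hello from a wristwatch']
```

The host knows only a generic way to read a description and forward commands. Everything specific to the device lives on the device, which is what would make printing from a wristwatch plausible.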

Fast forward to something a bit closer to the present day: last year, to be specific. I began a new job on the lot crew at an auto auction. While driving cars around the lot to their assigned destinations, I noticed the specialized operating systems in late-model vehicles, mainly those with touch screens. Some were brand-wide systems used across models, such as MyLink, uConnect, StarLink, and Cue. Other cars, like the Chrysler 200 and 300, have custom opening splash pages, though I’m not sure about the operating software itself (possibly a uConnect variant).

I also took note of some of the features that those systems control, including radio, phone, navigation, climate, cameras, and so on. What if such systems were made to be modular? What if each of those functions were add-on modules that are read by the base system? What if more features could be added simply by adding new modules with their own drivers (software, not the person driving the car), sort of like a micro distributed network?

This is hardly a new idea by any stretch. Consider the human brain. It’s segmented into lobes, which are further divided into regions that handle not only thought but also bodily functions. Dividing up the tasks makes everything run more efficiently. Personal computers have done the same for years, handing graphics and sound off to specialized processors. Granted, those processors have tended to require specialized drivers that don’t work with every operating system. But that’s beside the point.

Now, by contrast, consider the daemons, System Agents, and other processes constantly running in the background on your computer. (Windows users can open “Task Manager” to see the non-hidden ones currently running.) Each one takes up clock cycles and reduces the number of FLOPs (floating-point operations, or mathematical calculations) available for other work. More and more commands, apps, and other programs get piled one on top of another, burdening and slowing the central processing unit (CPU) and occasionally causing errors. The added “smartness” slows down and interferes with the actual “smartness.”

While a lot of software processes cannot be eliminated without disabling the programs they are tied to, it would be nice to eliminate the excess functions tied to devices that aren’t even connected. I’m not necessarily promoting switching to a microkernel like MINIX3 (no matter how freaking sweet MINIX3’s self-repairing ability is). But limiting processor use to what the connected devices actually need frees up clock cycles for everything else.
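Here is a sketch of the "only pay for what's plugged in" idea. It is a device manager that knows about many driver types but only creates and polls a handler while that handler's device is connected. This simplified example shows on-demand loading. It is not how any particular operating system manages drivers. `DeviceManager`, `CameraHandler`, and `RadioHandler` are made-up names, and the "work" each handler does is just a string.

```python
from typing import Callable, Dict, List


class Handler:
    """Base class for a hypothetical device handler (a stand-in for a driver)."""

    def __init__(self, device_id: str) -> None:
        self.device_id = device_id

    def poll(self) -> str:
        raise NotImplementedError


class CameraHandler(Handler):
    def poll(self) -> str:
        return f"{self.device_id}: frame captured"


class RadioHandler(Handler):
    def poll(self) -> str:
        return f"{self.device_id}: tuned"


class DeviceManager:
    def __init__(self) -> None:
        # Known driver types: registering one costs nothing until a device shows up.
        self._factories: Dict[str, Callable[[str], Handler]] = {}
        # Live handlers, kept only for devices that are actually connected.
        self._active: Dict[str, Handler] = {}

    def register(self, device_type: str, factory: Callable[[str], Handler]) -> None:
        self._factories[device_type] = factory

    def connect(self, device_type: str, device_id: str) -> None:
        self._active[device_id] = self._factories[device_type](device_id)

    def disconnect(self, device_id: str) -> None:
        # Dropping the handler means it no longer receives any CPU time.
        self._active.pop(device_id, None)

    def tick(self) -> List[str]:
        # One pass of the main loop: only connected devices get polled.
        return [handler.poll() for handler in self._active.values()]


if __name__ == "__main__":
    manager = DeviceManager()
    manager.register("camera", CameraHandler)
    manager.register("radio", RadioHandler)
    print(manager.tick())  # []  (nothing connected, nothing runs)
    manager.connect("camera", "rear-cam")
    print(manager.tick())  # ['rear-cam: frame captured']
    manager.disconnect("rear-cam")
    print(manager.tick())  # []
```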

It doesn’t just apply to personal computers and similar devices, like the dashboard systems in cars. It also carries over to robotics and robotic devices, including the cars themselves. Imagine a simple robotics computer that has little to no capability beyond what is added to it by components. Then, provided a component can use either the robot’s command port or its power-and-command ports (like USB), the robotic computer can instantly start using that component. The component handles the fine processing details while the main unit conducts the symphony. This applies to both sensors and operative devices, like arms and legs, and perhaps to doors, lights, and so on, as in larger vehicles or even spaceships and space stations, though hopefully without the psychosis of the HAL 9000.

This is where Mycroft comes in.

Mycroft uses programmed “skills” that can be installed by the user. In fact, users can create new skills for Mycroft; it’s open source, after all. Perhaps devices could provide their own skills for the A.I. to use. Perhaps there could even be a “learn” skill that lets devices teach it individual commands, rather than complete skills, for carrying out their operations. For example, a pair of legs could teach a “WALK” command with a few parameters, while wheels like R2-D2’s or tank treads like Johnny 5’s could teach a “MOVE” command. The component would process the details of the operation itself, freeing up the main processor for other uses.
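A "learn" skill like this can be sketched in Python, the language Mycroft skills are written in. This sketch does not use Mycroft's real skill API. `LearnSkill`, `teach`, `Legs`, and `Treads` are made-up names, and the sketch assumes the spoken command has already been transcribed to text. The main unit only matches a verb to the component that taught it and passes the arguments along. The component handles the details.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class LearnedCommand:
    verb: str
    params: List[str]
    handler: Callable[..., str]


class LearnSkill:
    """Hypothetical 'learn' skill: attached components teach the assistant new verbs."""

    def __init__(self) -> None:
        self._commands: Dict[str, LearnedCommand] = {}

    def teach(self, verb: str, params: List[str], handler: Callable[..., str]) -> None:
        self._commands[verb.upper()] = LearnedCommand(verb.upper(), params, handler)

    def handle(self, utterance: str) -> str:
        # Deliberately "stupid" parsing: the first word is the verb, the rest are
        # positional arguments. All real work happens inside the component.
        words = utterance.split()
        if not words:
            return "I didn't hear a command."
        verb, args = words[0].upper(), words[1:]
        command = self._commands.get(verb)
        if command is None:
            return f"I don't know how to {words[0].lower()} yet."
        if len(args) != len(command.params):
            return f"{verb} needs: {', '.join(command.params)}"
        return command.handler(**dict(zip(command.params, args)))


class Legs:
    """Hypothetical component; real gait planning would run on its own processor."""

    def walk(self, direction: str, steps: str) -> str:
        return f"walking {direction} for {int(steps)} steps"


class Treads:
    """Hypothetical component for a wheeled or tracked base."""

    def move(self, direction: str, meters: str) -> str:
        return f"rolling {direction} {float(meters)} m"


if __name__ == "__main__":
    skill = LearnSkill()
    legs, treads = Legs(), Treads()
    # Each component teaches the assistant its own verb when it is attached.
    skill.teach("walk", ["direction", "steps"], legs.walk)
    skill.teach("move", ["direction", "meters"], treads.move)
    print(skill.handle("walk forward 3"))  # walking forward for 3 steps
    print(skill.handle("move left 2"))     # rolling left 2.0 m
    print(skill.handle("dance"))           # I don't know how to dance yet.
```

The main unit's parsing is kept simple on purpose. The fewer decisions it makes, the more of its time is left for coordinating components.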

By distributing the processing for the operations, the system overall can run faster and more efficiently, with each processor having a dedicated task. Make the main processor stupider and the entire system becomes smarter. All with a friendly voice interface.
