A.I. Part 4: When Machines Are People Too

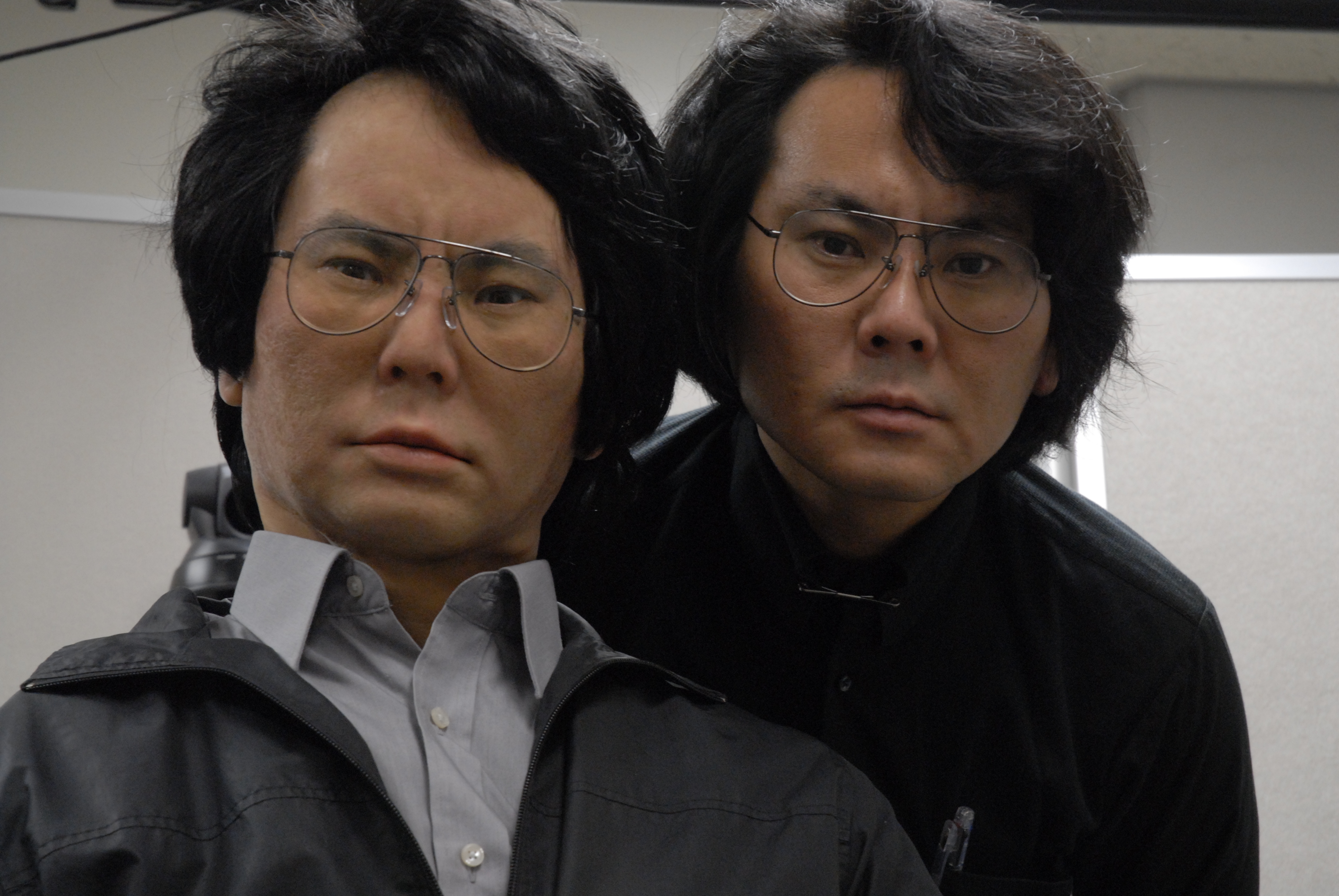

[Featured Image: Osaka University]

Sometimes, there is more to machines than meets the eye. No, I’m not talking about Transformers, regardless of how truly awesome Optimus Prime is. I’m talking about the ethical implications and the characteristics of machines that go beyond their programming and hardware. What rights should an intelligent machine have? And at what point is a machine self-aware? It’s like Johnny 5 or something straight out of an Isaac Asimov story.

Some scientists and futurists talk about the coming Technological Singularity, a point at which machines become smarter than humans and human intelligence can be enhanced by machines well beyond its normal capability. “Singularity” is an interesting word. Whenever scientists use the word “singularity”, it means, quite literally, “I don’t know”. When a singularity is referred to, it just means that “something happens here”. Words like “singularity” are used so that even when scientists are complete idiots about something, at least they’ll sound like they know what they’re talking about. In this case, the term signifies that, because the resulting intelligence would be beyond current human capabilities, we can’t begin to fathom what will happen to the world around us when machines become smarter than we are, or even self-aware.

We’ve seen it all over science fiction, from I, Robot to The Terminator. But what exactly is “self awareness”? There is no real agreement, and some of the testing methods are poorly thought out. Take the “Mirror Test”, for example. The idea behind it is that if an animal can look in a mirror and recognize itself, then it is “self aware”. But being able to recognize itself does not mean that the animal can ponder its own existence or come to terms with its own mortality. It just means that the animal is smart enough to recognize its own appearance and to understand that mirrors are reflections, not additional realities. That is not even close to what I would call self awareness. Robots can be taught to pass this test, but that doesn’t mean they are “self aware”.

I would contend that true self awareness is largely the ability to pick one’s own destiny. It is the ability to realize one’s own mortality and ponder one’s own existence. It is the ability to create one’s own path, often in spite of one’s expected or intended purpose. Simply carrying out tasks as expected is nothing more than running a program. But picking one’s own direction and purpose in this world is something no amount of fuzzy logic circuits can accomplish. It’s one of the primary things that make us human. Someone’s body may be built in a way that would make a great athlete. But if that person would rather paint pictures of polka-dancing garden gnomes wearing bedpans as hats, that person could certainly do that. It would be stupid, but that person could do it anyway.

I also believe that an important factor is uniqueness: how irreplaceable something is. I’m not talking about the ability to bring something else in to handle a particular job in a being’s absence. Even humans can easily be replaced in this manner. What I’m referring to is the impossibility of reproducing the apparent consciousness of a machine. Typically, everything that makes up a computer can be easily reproduced. This includes not only the hardware, but also the operating system, installed software, and some personal files. But what if a computer crashes, gets infected by a virus, or is dragged through puddles by an overzealous dog who wants to bury it in the backyard before its personal files have been backed up? Important files could very well be lost forever.

Now what if that information were built on experience? How can one reproduce the same experiences? The simple fact is that it can’t be done. This is not exactly the same as with humans, but it’s close. Much of a human’s personality is genetic, and is therefore present as soon as a sperm fertilizes an egg. The ability to express that personality comes with physical maturity (much of it is apparent well before birth), and it is developed and expanded through unique personal experience. The result is the human personality as we know it (with the possible exception of Al Gore). A computer, on the other hand, is composed of circuits and processors. If the necessary processing, data storage capacity, and sensors are present and in full working order, then its ability to analyze, process, and act can be built and refined through experience. It may not be as deeply unique or ingrained as a personality rooted in genetics. But it would constitute what we know as a personality nonetheless.

So if we take into account a machine’s level of self awareness and uniqueness, then at what point does cybercide equate to homicide? At what point does a mechanism deserve the same rights as an organism? Should the penalties for denying those rights to such a machine be the same as for denying them to a human? There are no implications quite as profound as whether or not a machine can be considered an individual.

So whether a machine should bear the rights of a human, be treated as equal to humans, and be defended by law as a human should be judged by three criteria: sentience, uniqueness, and self awareness. If a machine can show, beyond a reasonable doubt, signs of true emotion, is a unique individual shaped by the development it has acquired through experience, and can, on its own, behave beyond its initially designed expectations, then that machine deserves a status equivalent to a human’s. That means terminating its existence would no longer be an act of vandalism or property damage. That level of cybercide would be no different from homicide. A machine with such status would also be worthy of the right to vote and hold office. Just beware of a cyberocracy.

So when a machine gains sentience and thinking ability equal to or greater than a human’s, it doesn’t even have to be a Transformer to be more than meets the eye. Ethically, we would have to do what is now unthinkable: share our rights with computers! That would be the day on which we could all honestly proclaim that toasters are people too.

[Read Part 1] [Read Part 2] [Read Part 3]
